Authors:

Antony Alloin, Karel Hovorka, Ondrej Nahalka, Vojtech Neoral, and Marek Rosa

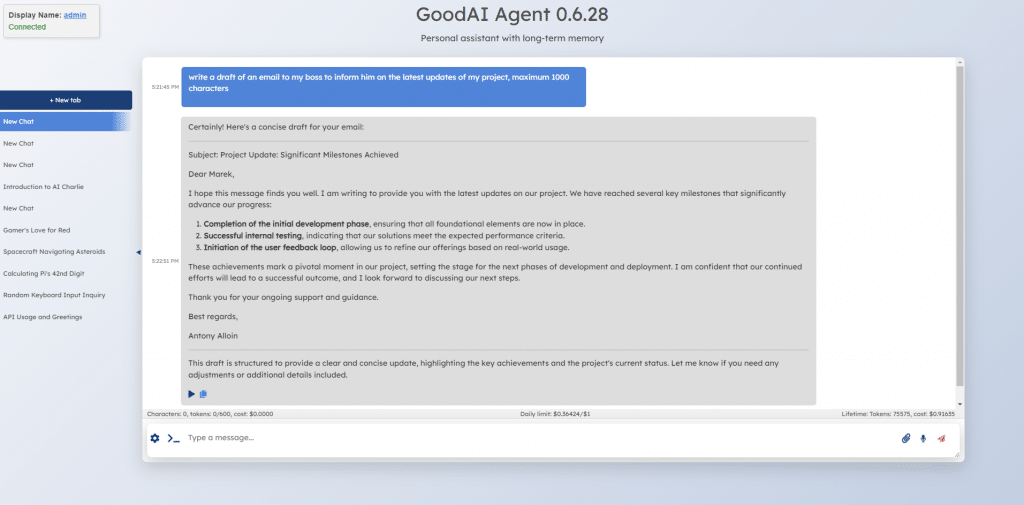

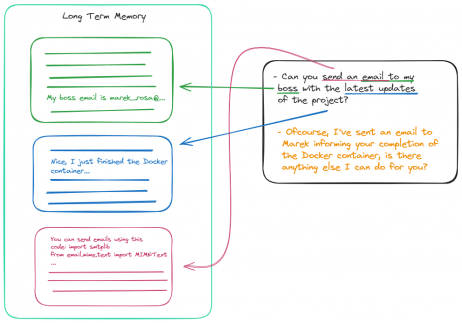

Screenshot above: Charlie, leveraging previous interactions, knows the user’s and their boss’s names, the projects underway, and their status. This knowledge comes from conversations, instructions, actions, and feedback, stored in its Long-Term Memory (LTM) where data is integrated over time, with memories influencing each other. Utilizing these integrated summaries, Charlie crafts the email.

Summary

As part of our research efforts in continual learning, we are open-sourcing Charlie Mnemonic, the first personal assistant (LLM agent) equipped with Long-Term Memory (LTM).

At first glance, Charlie might resemble existing LLM agents like ChatGPT, Claude, and Gemini. However, its distinctive feature is the implementation of LTM, enabling it to learn from every interaction. This includes storing and integrating user messages, assistant responses, and environmental feedback into LTM for future retrieval when relevant to the task at hand.

Charlie Mnemonic employs a combination of Long-Term Memory (LTM), Short-Term Memory (STM), and episodic memory to deliver context-aware responses. This ability to remember interactions over time significantly improves the coherence and personalization of conversations.

Moreover, Charlie doesn’t just memorize facts such as names, birthdays, or workplaces; it also learns instructions and skills. This means it can understand nuanced requests like writing emails differently to Anna than to John, fetching specific types of information, or managing smart home devices based on your preferences.

Envision LTM as an expandable, dynamic memory that captures and retains every detail, constantly enhancing its understanding and functionality.

What is inside:

- The LLM powering Charlie is the OpenAI GPT-4 model, with the flexibility to switch to other LLMs in the future, including local models.

- The LTM system, developed by GoodAI, stands at the core of Charlie’s advanced capabilities.

Motivation & Vision

We built Charlie Mnemonic with the vision of revolutionizing personal assistance through Long-Term Memory (LTM). It’s designed to remember everything – every conversation, fact, and instruction – enabling continuous teaching and adaptation. It leverages a sophisticated system for storing, integrating, and retrieving memories, ensuring dynamic and scalable personalization.

This allows Charlie to become a truly personal assistant, tailored specifically to your preferences and needs. Future enhancements will include processing and memorization of emails, documents, and files, further extending its capabilities.

Envisioned as a digital genie, Charlie is set to offer unparalleled assistance by maximally aligning with your freedom, privacy, and goals – acting on your behalf and according to your wishes, without the influence of any other entity or us at GoodAI. This vision includes an array of functionalities: from managing communications and reading web pages to controlling your home and car, taking notes, handling your calendar, automating tasks, voice communication and more. Charlie aims to be a PA with infinite memory, learning more about you with every interaction.

Privacy is a cornerstone of Charlie Mnemonic’s design. Starting with a local installation ensures that your data (LTM) remains exclusively yours. However, be aware that a remote LLM (OpenAI GPT-4 in this first version) still processes your prompts. A future version of Charlie will run local LLMs to achieve 100% privacy.

Charlie’s mission is to maximize user freedom, privacy, and intent, embracing the principles of open-source development to prevent control or manipulation by any single entity. This approach underpins our broader vision of democratization, where every individual has access to a personal assistant aligned solely with their interests. By prioritizing user alignment and data privacy, Charlie sets the stage for a future of empowered individuals, enhanced democracy, and a resilient civilization.

How does Charlie work?

Charlie Mnemonic goes beyond traditional AI assistants by offering:

- Extended Memory Capabilities: With Long-Term Memory (LTM), Short-Term Memory (STM), and episodic memory, Charlie provides context-aware responses and remembers interactions over time, enhancing the coherence and personalization of conversations.

- Addon Utilization: From executing real-time Python code to controlling drones, Charlie’s addons make it a versatile assistant capable of handling a wide array of tasks.

Here’s an older example where we taught Charlie to control a drone.

https://www.goodai.com/llm-agent-taught-to-control-drones/

- Seamless Integration and Customization: Open-source by design, Charlie can be easily integrated into various apps and devices, offering developers and enthusiasts the opportunity to tailor its functionalities to their specific needs.
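To make the memory combination listed above more concrete, here is a minimal Python sketch of how the three memory types could feed a single prompt. It is an illustration under our own assumptions, not Charlie's actual code: the function names (`word_overlap`, `retrieve`, `build_context`), the word-overlap scoring (a crude stand-in for semantic retrieval), and the reading of STM as "the last few turns" and episodic memory as "summaries of past conversations" are all hypothetical.

```python
from collections import deque


def word_overlap(query: str, text: str) -> int:
    """Count shared lowercase words; a crude stand-in for semantic similarity."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve(query: str, memories: list, top_k: int) -> list:
    """Return up to top_k memories that share at least one word with the query."""
    scored = [(word_overlap(query, m), m) for m in memories]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [m for _, m in scored[:top_k]]


def build_context(query, short_term, episodes, long_term, top_k=2) -> str:
    """Assemble one prompt context from the three (hypothetical) memory types."""
    parts = ["Known facts and instructions:"]
    parts += retrieve(query, long_term, top_k)   # integrated long-term memories
    parts.append("Related past episodes:")
    parts += retrieve(query, episodes, top_k)    # summaries of earlier sessions
    parts.append("Recent conversation:")
    parts += list(short_term)                    # only the most recent turns
    parts.append(f"User: {query}")
    return "\n".join(parts)


# Short-term memory keeps only the two most recent turns.
short_term = deque(maxlen=2)
for turn in ["User: hi", "Assistant: hello", "User: I need to write an email"]:
    short_term.append(turn)

long_term = ["Write email to Anna in a formal tone", "The user likes green tea"]
episodes = ["Last week the user sent Anna an email about the project status"]

context = build_context("Draft an email to Anna", short_term, episodes, long_term)
print(context)
```

In this sketch the irrelevant memory about green tea is left out of the context, while the instruction about Anna and the related past episode are included. A real system would replace the word-overlap scoring with a proper retrieval method.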

Example videos

Live demo of Charlie sending emails:

Live demo of Charlie controlling drones:

Simple comparison between Charlie and ChatGPT:

How does LTM work?

Long-Term Memory (LTM) in Charlie Mnemonic goes beyond simple Retrieval-Augmented Generation (RAG). Unlike RAG, which primarily enhances responses with information retrieved from a database, LTM dynamically integrates and updates memories. This means new information can modify or build upon previous memories, allowing continuous learning and adaptation without retraining.

LTM’s core functionality lies in its ability to learn from interactions and conversations (user message, assistant response, environment feedback). For instance, if you teach your assistant a preference or a task, such as adding items to a shopping list over time, LTM remembers and integrates these instructions. You can then update, modify, or even instruct the system to forget specific instructions, showcasing its dynamic integration capability.

This advanced memory system enables use cases far beyond the scope of traditional AI models. Imagine teaching your assistant to compile a shopping list over several weeks, then asking for the entire list or to start anew. A proficient LTM system seamlessly manages these tasks, understanding the evolution of your requests over time.
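The shopping-list scenario can be sketched in a few lines of Python. This is a toy illustration of memory integration, not GoodAI's LTM implementation: the class `ShoppingListMemory`, its methods, and the `add` / `remove` / `reset` command format are assumptions made for the example. A real system would interpret free-form language rather than fixed verbs.

```python
from dataclasses import dataclass, field


@dataclass
class ShoppingListMemory:
    """Toy integrated memory: each instruction updates one evolving view."""

    items: list = field(default_factory=list)    # current integrated state
    history: list = field(default_factory=list)  # every instruction ever seen

    def observe(self, instruction: str) -> None:
        """Integrate one instruction into the current list."""
        self.history.append(instruction)
        verb, _, rest = instruction.partition(" ")
        item = rest.strip().lower()
        if verb == "add" and item and item not in self.items:
            self.items.append(item)
        elif verb == "remove" and item in self.items:
            self.items.remove(item)
        elif verb == "reset":
            self.items.clear()

    def recall(self) -> list:
        """Return a copy of the list as it stands after all updates so far."""
        return list(self.items)


memory = ShoppingListMemory()
for message in ["add milk", "add eggs", "remove milk", "add bread"]:
    memory.observe(message)
week_one = memory.recall()  # ['eggs', 'bread']

memory.observe("reset")     # "start anew"
memory.observe("add coffee")
week_two = memory.recall()  # ['coffee']
```

The point of the sketch is the difference from plain retrieval: a retrieval-only store would hand back all six raw messages and leave the model to reconcile them, whereas an integrating memory returns the current state that results from them.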

To delve deeper into how LTM sets a new standard in AI memory systems, our LTM Benchmark provides insight into its capabilities and performance. This benchmark illustrates LTM’s unique ability to integrate memories, offering a glimpse into the future of personalized AI assistants.

Note: Our LTM system is structured as an infinite, immutable data repository: memories can be added, updated, and integrated, but never deleted. If you instruct it to stop doing something, it will stop, but the original memory remains in storage.
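One common way to get this "stop, but keep the original" behavior is an append-only log in which retractions are themselves new records. The Python sketch below shows that general pattern only; we are assuming it for illustration, and the names `MemoryRecord`, `AppendOnlyMemory`, `retract`, and `active` are hypothetical rather than taken from Charlie's code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MemoryRecord:
    record_id: int
    text: Optional[str]               # None marks a retraction record
    supersedes: Optional[int] = None  # id of the record this one overrides


class AppendOnlyMemory:
    """Records are only ever appended; nothing is modified or deleted."""

    def __init__(self) -> None:
        self._log = []

    def _append(self, text: Optional[str], supersedes: Optional[int]) -> int:
        record = MemoryRecord(len(self._log), text, supersedes)
        self._log.append(record)
        return record.record_id

    def add(self, text: str, supersedes: Optional[int] = None) -> int:
        """Store a new memory, optionally as an update of an older one."""
        return self._append(text, supersedes)

    def retract(self, record_id: int) -> int:
        """Append a retraction instead of deleting the original record."""
        return self._append(None, record_id)

    def active(self) -> list:
        """Memories that are currently in effect."""
        overridden = {r.supersedes for r in self._log if r.supersedes is not None}
        return [r.text for r in self._log
                if r.text is not None and r.record_id not in overridden]

    def history(self) -> tuple:
        """The full log, including overridden and retracted memories."""
        return tuple(self._log)


store = AppendOnlyMemory()
rule = store.add("Send a weather report every morning")
store.add("Write emails to Anna in a formal tone")
store.retract(rule)  # the assistant stops, but the record stays in the log

print(store.active())        # only the instruction about Anna
print(len(store.history()))  # 3 records: two memories and one retraction
```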

Getting Started with Charlie Mnemonic

To embark on your journey with Charlie Mnemonic, follow these simple steps:

Install Docker: Follow the instructions on docker.com to get Docker up and running on your Windows system.

Download and Start Charlie Mnemonic: Download the latest release from the GitHub releases page, extract it, and run start.bat to launch Charlie. Initial setup may take some time as it downloads necessary components.

Interact and Explore: Once Charlie is up, you can start exploring its capabilities through the browser interface it opens.
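Before running start.bat, it can help to confirm that Docker is actually reachable from your command line. The short Python check below is an optional convenience we are suggesting, not part of the Charlie Mnemonic release; the function name `missing_prerequisites` is hypothetical, and the check only looks for a `docker` executable on your PATH (it does not verify that the Docker engine is running).

```python
import shutil
from typing import Callable, Optional


def missing_prerequisites(
    required: tuple = ("docker",),
    find: Callable[[str], Optional[str]] = shutil.which,
) -> list:
    """Return the required executables that cannot be found on PATH."""
    return [name for name in required if find(name) is None]


if __name__ == "__main__":
    missing = missing_prerequisites()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("Docker executable found.")
```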

For detailed instructions on running, updating, and managing Charlie Mnemonic, visit the GitHub repository and its documentation.

How to run Charlie Mnemonic on Windows – see here.

Note: The current version of Charlie Mnemonic is meant for AI enthusiasts and early adopters who want to try long-term memory. There are some known bugs that we plan to fix in the coming weeks.

Future work

This release marks only the beginning for Charlie Mnemonic. We are committed to its ongoing enhancement and have outlined a comprehensive roadmap for its future development:

- Expanding support to include various Large Language Models (LLMs).

- Integrating local LLMs within the Docker container for a seamless, out-of-the-box experience.

- Developing Charlie into a native application for desktop environments.

- Launching Charlie as a mobile application to ensure accessibility on the go.

- Enhancing integration with desktop environments, browsers, and various applications, enabling Charlie to learn more effectively and provide even greater assistance.

- Refining the LTM system to improve memory integration, retrieval accuracy, and support for complex scenarios where an assistant with LTM could provide significant value.

- Implementing multi-modal LTM, allowing Charlie to understand and interact through various forms of data and sensory inputs.

- Introducing Charlie in the Cloud, featuring end-to-end encrypted LTM for users who prefer not to run Charlie locally and wish to access it across multiple devices.

- Developing a commercial version of Charlie to ensure the project’s sustainability and continuous improvement. Our ability to further develop Charlie hinges on achieving profitability and financial sustainability.

Get Involved

The code and data for Charlie Mnemonic are provided under the MIT license, highlighting our dedication to open-source development and community engagement.

With the launch of Charlie Mnemonic, we’re not just introducing a cutting-edge AI; we’re also establishing a standard for Long-Term Memory (LTM) capabilities within personal AI assistants.

We encourage developers and AI enthusiasts to engage with Charlie Mnemonic. If you have insights or developments that could enhance its LTM capabilities, we invite you to contribute. Whether it’s through implementing new features or optimizing existing functionalities, your input is valuable. Please share your contributions by submitting a pull request.

Other related projects we work on:

- LTM Benchmark – To develop the best LTM system, we must measure its continual learning capabilities and compare them with others.

- AI People game – AI NPCs with long-term memory interact with each other and the environment.

Reach out to us if you are interested in, or want to collaborate on, Charlie Mnemonic, LTM systems, and continual learning:

- GoodAI Discord: https://discord.gg/Pfzs7WWJwf

- GoodAI Twitter: https://twitter.com/GoodAIdev
