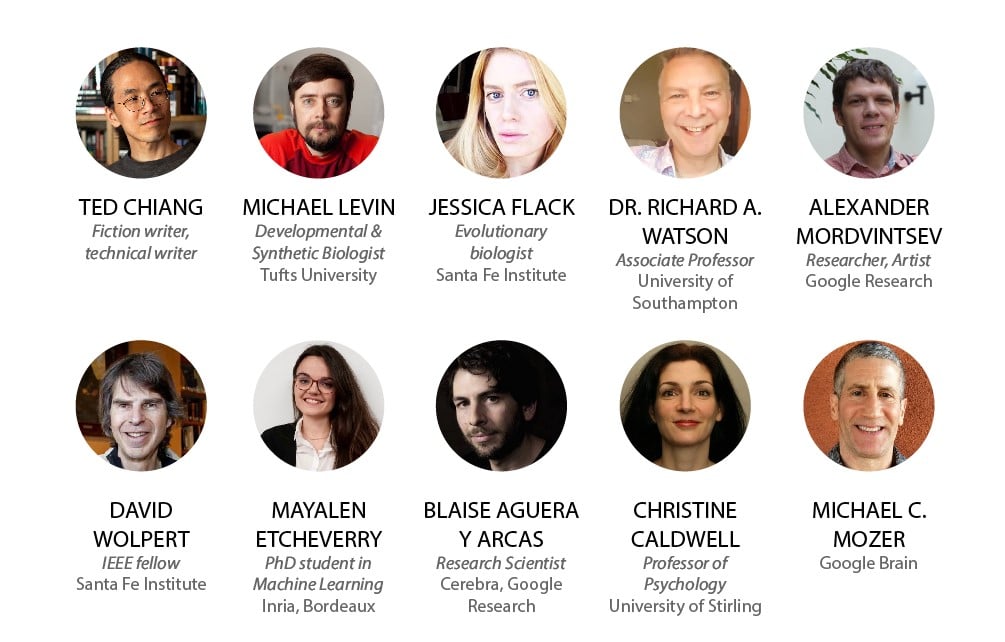

We recently co-organized a workshop with Google Research and the University of Chemistry and Technology in Prague for the 10th annual ICLR machine learning conference. Driven by the viewpoint that intelligence is collective, we brought together a panel of experts across a range of disciplines to investigate the fundamental properties of collectives and how this knowledge might translate to artificial learning advances.

Why collective learning across scales?

We are made of parts, we live in families and groups, and we work in collectives. Yet much of machine learning is still carried out in isolation. We train our agents alone in their environments while feeding monolithic architectures huge amounts of data. It is like teaching a child without parents, friends, or a community: it is ineffective, and it lacks something that makes humans very powerful, namely the cumulative nature of culture.

Our capacity to develop new ideas, materials, and technologies within a single lifetime is due to the human ability to pass on knowledge across generations. This phenomenon, cumulative cultural evolution, is just one specific example of why collectives are worth looking to when building artificial agents.

Many questions remain open, for example:

- What are the deep invariants across systems and scales?

- Which deep invariants would allow us to build systems that do not overfit, that are able to adapt to entirely new and unseen problems and environments, and that could eventually allow for open-endedness?

- What about interactions between systems and their parts that give rise to dynamics that are hard or even impossible to obtain in a single, monolithic system, for example when many goals interact? What benefits do such interactions give us?

- And where do such goals come from?

Speakers presented research findings through the lens of the biological sciences, physics, psychology, and literature, uncovering patterns of learning through local interaction at different scales. Topics included the parallels between biological evolution and learning systems, emergence and self-organization, the limits of optimization, and redefining what goals mean in terms of system behavior.

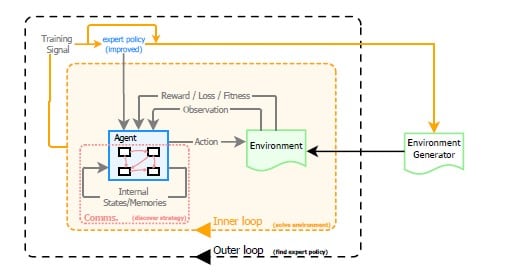

A full recording of the talks can be viewed on the ICLR website. Below, we give the timestamp at which each presentation starts, together with a few takeaways that we find inspiring for our ongoing research on Badger, an architecture and learning procedure built around the key principle of modular, lifelong learning.

Badger architecture

Highlights from speaker talks

Christine A. Caldwell

Topic: The accumulation of intelligence in societies via cumulative cultural evolution

Video Link | Timestamp: 4:54:00

- Sometimes the learners’ errors accumulate and end up producing something that’s easier to learn. Cumulative culture is a very important characteristic of human collective intelligence, but it’s not a direct consequence of social learning: learners may need to actively seek out relevant information.

- One hypothesis is that cumulative knowledge requires a model of one's own epistemology, i.e. knowing how to acquire the knowledge a given task needs. This would imply a minimum level of complexity, and a necessary building block, for cumulative knowledge to arise.

Michael Levin

Topic: The Wisdom of the Body: evolutionary origins and implications of multi-scale intelligence

Video Link | Timestamp: 7:29:00

- Biological systems solved problems long before there were neurons or brains — morphogenesis is proto-intelligence.

- Agency and intelligence lie on a continuum and are built hierarchically from the self-organization and collaboration of simpler parts.

- Agency is a very handy concept for predicting a complex system's behavior: it is often much easier to think about what the system "wants" than to model it precisely.

- Every level of complexity in multi-scale intelligence (e.g. from individual cells to the entire organism) has a set of competencies and solves a problem in a goal space. Higher levels translate their stresses (goals) into lower-level stresses (actions).

- Once cells start sharing stress, the collective starts behaving as a whole. The optimal set point of every cell changes and becomes the equivalent of a high-dimensional vector → this seems to be what gives rise to complex behavior and an automatic division of labor (modularity).

Blaise Agüera y Arcas

Topic: A multiscale social model of intelligence

Video Link | Timestamp: 8:52:50

- AGI is happening.

- Prediction is understanding.

- LaMDA (an unsupervised language model) passed the Sally-Anne test, a theory-of-mind test that small children and most animals cannot pass. This is very impressive and suggests that AGI could be achieved with language models alone, even if they are ungrounded.

- Big shift: foundation models and unsupervised learning.

David Wolpert

Topic: The Singularity will occur – and be collective

Video Link | Timestamp: 8:21:20

- Flash crashes → empirical proof of the power of collectives. A large set of extremely simple agents (e.g. "if sugar goes up, buy oil") can give rise to extremely complex behavior.

- With many-to-many interactions, the reward space becomes complex and non-trivial (game theory).

Richard A. Watson

Topic: Natural Induction: Conditions for spontaneous adaptation in dynamical systems

Video Link | Timestamp: 1:11:40

- Adaptation can happen without selection.

- Dynamical systems with non-rigid relationships exhibit a form of memory.

- When constraints give way a little, the system falls in a different local minimum every time → natural exploration.

- This is similar to out-of-the-box thinking: constraints are not hard but always offer some flexibility, so the system can ignore them initially and restore them afterwards.

- Implicitly, systems with these properties discover correlations in the data. As the number of interactions grows, the correlations grow in complexity too.

- There are many scenarios in nature in which this kind of adaptation occurs. Selection might only apply to some elementary things when individuals compete with each other, while natural induction occurs spontaneously at the group level, without competition.

- Perturbations are key, so that the system has the chance to relax and visit new minima. This process of cyclic stress and relaxation is how the system finds very complex correlations, and learns.
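The perturb-and-relax cycle described above can be sketched with a small Hopfield network. This is a minimal toy loosely inspired by the Hopfield-style models Watson uses to illustrate natural induction, not code from the talk: the random ±1 constraints, the network size, the learning rate `lr`, and the number of cycles are all assumptions. Each cycle resets the state at random (the perturbation), lets it settle into a local energy minimum (the relaxation), and then applies a small Hebbian change to the connections, so that the non-rigid part of the system slowly remembers the correlations it keeps landing in.

```python
import numpy as np


def random_constraints(n, rng):
    """Random symmetric +/-1 couplings with a zero diagonal (assumed 'original' constraints)."""
    upper = np.triu(rng.choice([-1.0, 1.0], size=(n, n)), k=1)
    return upper + upper.T


def energy(state, weights):
    """Hopfield energy: lower means more pairwise constraints are satisfied."""
    return -0.5 * state @ weights @ state


def relax(state, weights, rng):
    """Asynchronous updates until no unit wants to flip, i.e. a local energy minimum."""
    state = state.copy()
    changed = True
    while changed:
        changed = False
        for i in rng.permutation(len(state)):
            field = weights[i] @ state
            # Flip only when the unit strictly disagrees with its local field:
            # each flip then strictly lowers the energy, so the loop terminates.
            if state[i] * field < 0:
                state[i] = -state[i]
                changed = True
    return state


def natural_induction(original, cycles=200, lr=0.001, seed=0):
    """Repeated perturb -> relax -> Hebbian-update cycles (hypothetical parameters)."""
    rng = np.random.default_rng(seed)
    n = original.shape[0]
    learned = np.zeros_like(original)  # slowly changing, "non-rigid" part of the connections
    energies = []
    for _ in range(cycles):
        start = rng.choice([-1.0, 1.0], size=n)             # perturbation: random reset
        attractor = relax(start, original + learned, rng)    # relaxation under current connections
        energies.append(energy(attractor, original))         # scored on the original constraints only
        learned += lr * np.outer(attractor, attractor)       # Hebbian: reinforce co-active units
        np.fill_diagonal(learned, 0.0)                       # keep the diagonal at zero
    return learned, energies


rng = np.random.default_rng(42)
W0 = random_constraints(30, rng)
learned, energies = natural_induction(W0)
print(np.mean(energies[:20]), np.mean(energies[-20:]))
```

Comparing early and late entries of `energies` shows whether the accumulated Hebbian changes are steering the system toward minima that better satisfy the original constraints; whether that happens in a given run depends on the network size, `lr`, the number of cycles, and the seed.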

Michael C. Mozer

Topic: Human and machine learning across time scales

Video Link | Timestamp: 5:19:00

- Human memory and forgetting are more rational than they seem — adapted to the structure of the world.

- Spaced repetition improves learning because the temporal structure of the world (when, how frequently things happen) contains hints about the relevance of objects and events and our minds exploit this information.

- Catastrophic forgetting in sequential learning is a problem for humans too. For example, a student who has attended a first class should review what they learned there before enrolling in a second class; otherwise the knowledge from the first class will be forgotten. In general, a learner should invest ~50% of their time in reviewing everything they currently know. In that case, the knowledge is preserved: "Catastrophic forgetting vanishes in structured environments."

Mayalen Etcheverry

Topic: Assisting scientific discovery in complex systems

Video Link | Timestamp: 28:05

- Complex systems can be explored by rewarding the agent for reaching nearby world states. Although these states are close to each other, the system's complexity means that getting from one to another may take a non-trivial sequence of actions.

- Remarkably dynamic and robust results in the Lenia cellular automaton: creatures are able to navigate, pass through obstacles, and even reproduce. The framework resembles children's play, where the agent simply tries to see whether it can reach new, self-set goals.
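The exploration idea above can be illustrated with a simple goal-exploration loop. The sketch below follows the general pattern of intrinsically motivated goal exploration processes (IMGEPs), but it is a swapped-in, simplified technique rather than the method presented in the talk: `toy_system` is a hypothetical stand-in for something like a Lenia rollout, and `sigma`, the goal-sampling box, and the iteration counts are assumptions. The agent sets itself a goal in outcome space, starts from the past attempt that came closest, and tries a small variation of it, so each step only has to reach a state near one it has already visited.

```python
import numpy as np


def toy_system(params):
    """Hypothetical stand-in for an expensive complex system (e.g. a cellular automaton run):
    maps 2 parameters to a 2-D outcome nonlinearly."""
    a, b = params
    return np.array([np.sin(3 * a) + b ** 2, np.cos(2 * b) * a])


def goal_exploration(system, n_random=20, n_goals=200, sigma=0.1, seed=0):
    rng = np.random.default_rng(seed)
    params_hist, outcome_hist = [], []
    # Bootstrap the history with a few random parameter settings.
    for _ in range(n_random):
        p = rng.uniform(-1, 1, size=2)
        params_hist.append(p)
        outcome_hist.append(system(p))
    for _ in range(n_goals):
        outcomes = np.array(outcome_hist)
        # Self-set goal: sampled from a slightly enlarged box around outcomes reached so far.
        lo, hi = outcomes.min(axis=0), outcomes.max(axis=0)
        margin = 0.1 * (hi - lo)
        goal = rng.uniform(lo - margin, hi + margin)
        # Start from the past attempt whose outcome is closest to the goal...
        nearest = np.argmin(np.linalg.norm(outcomes - goal, axis=1))
        # ...and try a small random variation of its parameters.
        p = params_hist[nearest] + rng.normal(0, sigma, size=2)
        params_hist.append(p)
        outcome_hist.append(system(p))
    return np.array(params_hist), np.array(outcome_hist)


params, outcomes = goal_exploration(toy_system)
print(params.shape, outcomes.shape)  # (220, 2) (220, 2)
```

Because goals are sampled just beyond the region already covered, the history tends to push outward into unexplored outcomes; no task-specific reward is needed.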

Alexander Mordvintsev

Topic: Differentiable self-organizing systems

Video Link | Timestamp: 50:40

- We want to build programmable artificial life (ALife)

- Self-construction is one of the foundational skills

- Life builds complex structures from locally sourced material

- Simulations are costly and evolution struggles with too many parameters → go in the opposite direction: make simulation units as close as possible to the hardware (so there is no additional simulation cost) and reduce the number of parameters to a minimum (a few dozen).
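To make "a few dozen parameters" concrete, here is a minimal forward step of a neural cellular automaton in NumPy. It is a simplified sketch, not the speaker's model: each cell perceives its own state plus Sobel gradients of its 3x3 neighbourhood and applies one shared linear rule, giving 52 parameters for 4 channels. The channel count, grid size, `fire_rate`, and the single linear layer are assumptions; in the differentiable setting the weights would be trained by backpropagating through many such steps, which this sketch omits. Because every cell reads only its neighbours and runs the same rule, the update is embarrassingly parallel, which is what lets it map closely onto hardware.

```python
import numpy as np

# 3x3 filters applied to every channel: identity plus Sobel gradients.
IDENTITY = np.array([[0, 0, 0], [0, 1, 0], [0, 0, 0]], dtype=float)
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float) / 8.0
SOBEL_Y = SOBEL_X.T


def conv3x3(grid, kernel):
    """Wrap-around 3x3 correlation on an (H, W, C) grid, using only local neighbours."""
    out = np.zeros_like(grid)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            # np.roll with a negative shift moves the neighbour at (y+dy, x+dx) to (y, x).
            out += kernel[dy + 1, dx + 1] * np.roll(grid, shift=(-dy, -dx), axis=(0, 1))
    return out


def perceive(grid):
    """Each cell sees its own state and local gradients: (H, W, 3C) features."""
    return np.concatenate([conv3x3(grid, k) for k in (IDENTITY, SOBEL_X, SOBEL_Y)], axis=-1)


def nca_step(grid, weights, bias, rng, fire_rate=0.5):
    """One asynchronous update: the same tiny linear rule runs in every cell."""
    update = perceive(grid) @ weights + bias                   # (H, W, C)
    mask = rng.random(grid.shape[:2])[..., None] < fire_rate  # random subset of cells updates
    return grid + update * mask


channels = 4
rng = np.random.default_rng(0)
weights = rng.normal(0, 0.1, size=(3 * channels, channels))  # 12 * 4 = 48 parameters
bias = np.zeros(channels)                                     # + 4 = 52 parameters in total
grid = np.zeros((16, 16, channels))
grid[8, 8] = 1.0  # a single seed cell
for _ in range(10):
    grid = nca_step(grid, weights, bias, rng)
print(weights.size + bias.size)  # 52
```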

Ted Chiang

Topic: Digient education: how might we teach artificial lifeforms?

Video Link | Timestamp: 4:31:00

- For intelligence to arise, we might need to start with an agent that exhibits characteristics of artificial life, and let intelligence emerge through processes akin to evolution and through teaching, much as we teach children (something Alan Turing already proposed in his original paper).

- We assume that we get most of our knowledge from written text; however, writing is a recent development, and 10,000 years ago you would have learned through interaction with others. That is also how other species learn. This can give us hints about the mechanisms of learning.

- If we want AIs to develop common sense, we might consider embodying the agents in a simulated environment with a realistic physics engine, where they would learn through interacting with others.

- Constructivism vs transmission model of learning: learning as an active social process, as opposed to learning as transmitting information to students which they passively memorize.

- The transmission model has been falling out of favor in schools and does not scale in humans, yet it is still how we teach most of our AI. In humans, there is no shortcut to instilling what we call knowledge. Maybe there isn't one for AIs either?

Jessica Flack

Topic: Hourglass Emergence: How far down do we need to go?

Video Link | Timestamp: 5:41:00

- How far down do we need to go to explain and predict the macroscale?

- Physics derives its fundamental concepts from simple collective interactions, whereas biology makes use of comparable collective concepts that are functional

- Physics produces order via energy minimization; adaptive systems produce order via information processing

- Complexity begets complexity. It is misleading to think that the microscopic is necessarily simpler than the macroscopic.

- We need to consider the system’s point of view

- How does nature overcome subjectivity generated during information processing?

- Endogenous coarse-graining between micro and macro results in an information bottleneck that is sufficient and suitable for predicting the macroscale without recourse to the microscale

If you are interested in any of the topics mentioned, have experience in a related field, or believe you could contribute and would like to talk with our team, please contact us at info@goodai.com.

You can also visit the Cells to Societies workshop website to learn more and access the list of accepted papers and posters.

To date, most of what we consider general AI research is done in academia and inside big corporations. GoodAI strives to combine the best of both cultures, academic rigor and fast-paced innovation. We aim to create the right conditions to collaborate and cooperate across boundaries. Our goal is to accelerate the progress towards general AI in a safe manner by putting emphasis on community-driven research.

If you are interested in Badger Architecture and the work GoodAI does and would like to collaborate, check out our GoodAI Grants opportunities or our Jobs page for open positions!

For the latest from our blog, sign up for our newsletter.
